Apache Spark Tutorial in Spanish (PDF)

Apache Spark is a data analytics engine. Companies like Apple, Cisco, and Juniper Networks already use Spark for various big data projects.

Apache Spark Architecture: Distributed System Architecture Explained (Edureka)

Geospatial Data Management in Apache Spark.

Apache Spark uses memory for persistent storage as well as for compute. Apache Spark 101, by Lance Co Ting Keh, Senior Software Engineer, Machine Learning, Box. Return to your workplace and demo the use of Spark.

Spark eliminated the need to combine multiple tools, each with its own challenges and learning curve. Apache Spark represents a revolutionary new approach that shatters the previously daunting barriers to designing, developing, and distributing solutions capable of processing the colossal volumes of big data that enterprises are accumulating each day. By the end of the day, participants will be comfortable with the following.

The following is an overview of the concepts and examples covered in these Apache Spark tutorials. Review of Spark SQL, Spark Streaming, and MLlib. A developer should use Spark when she handles large amounts of data.

Web-based companies like Chinese search engine Baidu, e-commerce operation Alibaba Taobao, and social networking company Tencent all run Spark. Review advanced topics and BDAS projects. There are separate playlists for videos on different topics.

These accounts will remain open long enough for you to export your work. A Tutorial. Jia Yu and Mohamed Sarwat, School of Computing, Informatics, and Decision Systems Engineering, Arizona State University, 699 S. Mill Avenue, Tempe, AZ 85281 (jiayu2@asu.edu, msarwat@asu.edu). Abstract: The volume of spatial data increases at a staggering rate. Spark has a thriving open-source community and is the most active Apache project at the moment.

We are excited to bring you the most complete resource on Apache Spark today, focusing especially on the new generation of Spark APIs introduced in Spark 2.0. Apache Spark Tutorial in PDF: you can download the PDF of this tutorial for a nominal price of 9.99.

Welcome to this first edition of Spark. PySpark allows working with RDDs (Resilient Distributed Datasets) in Python.

Develop Spark apps for typical use cases. Apache Spark is a lightning-fast cluster computing framework designed for fast computation.

Developer community resources, events, etc. See the Apache Spark YouTube Channel for videos from Spark events.

Apache Spark is a high-performance open source framework for big data processing. Spark is the preferred choice of many enterprises and is used in many large-scale systems. Besides browsing through playlists, you can also find direct links to videos below. In addition, this page lists other resources for learning Spark.

Explore data sets loaded from HDFS, etc. Open a Spark Shell.

Get started using Apache Spark, as the motto "Making Big Data Simple" states. Tour of the Spark API.

This is a brief tutorial that explains the basics of Spark Core programming. Apache Spark is an open-source cluster computing framework for real-time processing. It builds on the ideas of Hadoop MapReduce and extends the MapReduce model to efficiently support more types of computation, including interactive queries and stream processing.
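To make the MapReduce model mentioned above concrete, here is a minimal single-machine sketch in plain Python. It is not Spark or Hadoop code: the function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are hypothetical and chosen only for illustration, and a real cluster would run each phase across many machines.

```python
from collections import defaultdict


def map_phase(lines):
    """Emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group emitted values by key, the step that sits between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Collapse each key's list of values into a single count."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines):
    """Chain the three phases into one word-count job."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    sample = ["spark extends mapreduce", "spark is fast"]
    print(word_count(sample))
```

Every job in this toy model has to be squeezed into the same map, shuffle, reduce shape, which is the restriction that interactive queries and stream processing run up against.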

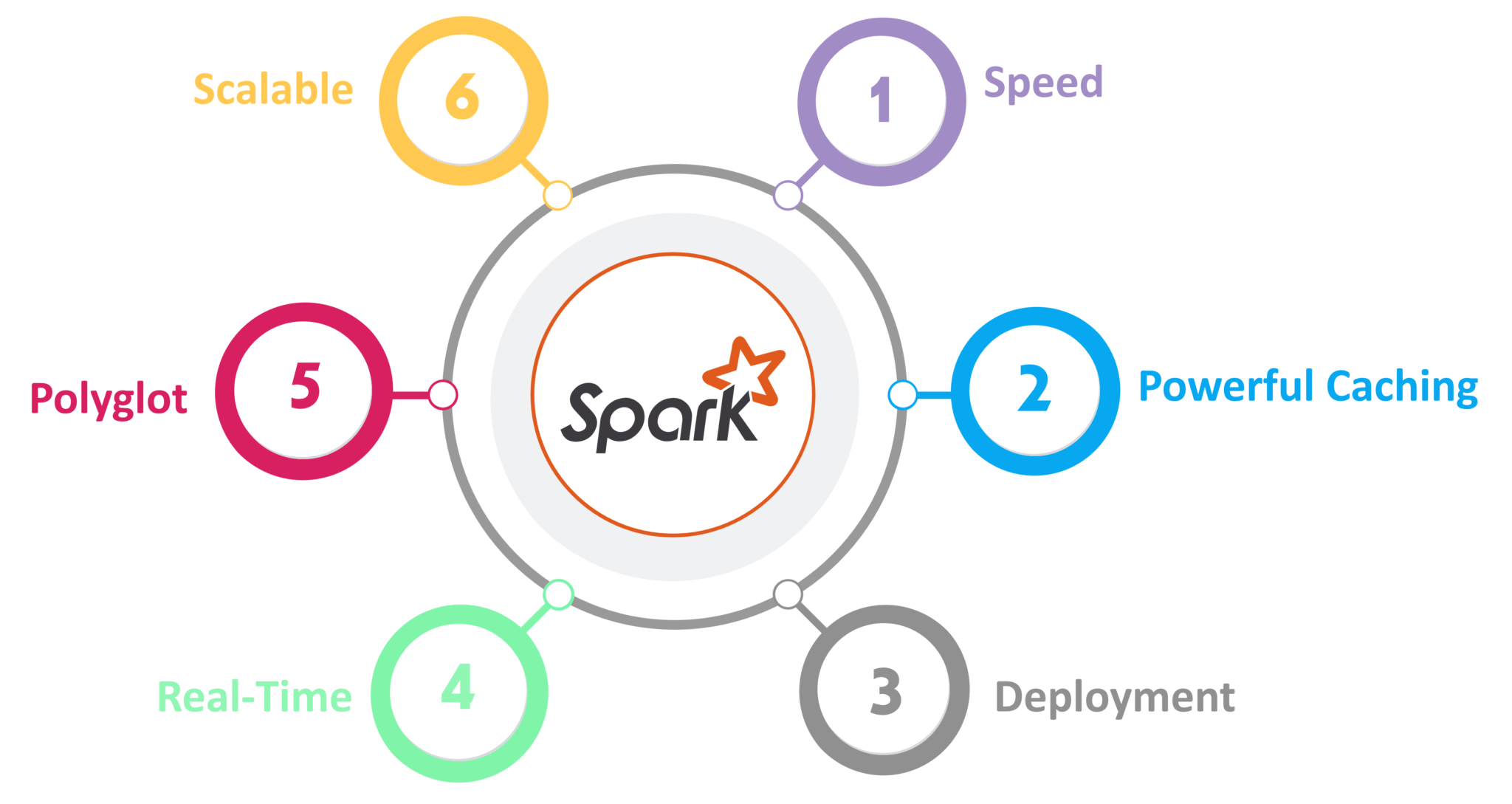

Review of Spark SQL, Spark Streaming, and Shark. Spark has versatile language support, with APIs in Scala, Java, Python, and R. Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

Use of some ML algorithms. When Apache Spark arrived, it provided a single runtime to address all these challenges. Follow-up courses and certification.

What is Apache Spark? This series of Spark tutorials deals with Apache Spark basics and libraries.

PySpark is a tool created by the Apache Spark community for using Python with Spark. First Steps with Spark.

Companies are integrating Apache Spark into their own products and contributing enhancements and extensions back to the Apache project. Spark MLlib, GraphX, Streaming, and SQL, with detailed explanations and examples. Spark is the name of the engine that realizes cluster computing, while PySpark is Python's library for using Spark.

Please create and run a variety of notebooks on your account throughout the tutorial. Getting started with apache-spark. Remarks: Apache Spark is an open source big data processing framework built around speed, ease of use, and sophisticated analytics.

PySpark also offers the PySpark shell, which links the Python API to Spark Core and initializes a SparkContext.
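As a rough illustration of working with an RDD through a SparkContext, the sketch below squares a small list of numbers and sums the result. The helper names `sum_of_squares_local`, `sum_of_squares_spark`, and `run_demo`, the app name `first-steps`, and the `local[*]` master are assumptions made for this example. Inside the PySpark shell a context is already available as `sc`, so only `sum_of_squares_spark(sc, numbers)` would be needed there.

```python
def sum_of_squares_local(numbers):
    """Plain-Python reference result, used to check the Spark version."""
    return sum(n * n for n in numbers)


def sum_of_squares_spark(sc, numbers):
    """Same computation expressed as RDD operations on an existing SparkContext."""
    rdd = sc.parallelize(numbers)          # distribute the list as an RDD
    return rdd.map(lambda n: n * n).sum()  # lazy transformation, then an action


def run_demo():
    """Start a local SparkContext, run the job, and always stop the context."""
    # Imported here so the helpers above stay importable without PySpark installed.
    from pyspark import SparkContext

    # "local[*]" runs Spark inside this process using all available cores.
    sc = SparkContext(master="local[*]", appName="first-steps")
    try:
        numbers = list(range(1, 6))
        result = sum_of_squares_spark(sc, numbers)
        assert result == sum_of_squares_local(numbers)
        return result
    finally:
        sc.stop()
```

Calling `run_demo()` requires a working PySpark installation. The `map` call only records the transformation, and nothing is computed until the `sum` action runs.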

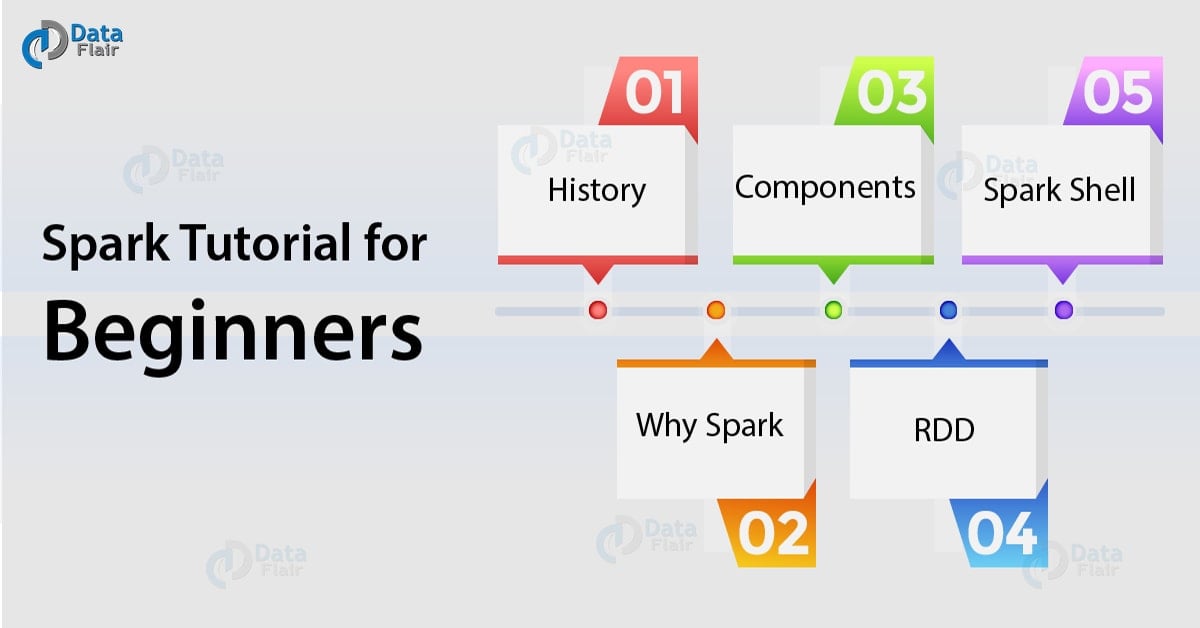

What Is Spark? Apache Spark Tutorial for Beginners (DataFlair)

Creating and Running an Apache Spark Application with IntelliJ IDEA on Windows

Download PDF: Apache Spark 2.x Machine Learning Cookbook: Over 100 Recipes to Simplify Machine Learning Mo…